Introduction

The ChatGPT API, developed by OpenAI, allows developers to integrate the power of conversational AI into their applications. With its ability to understand and generate human-like text, it opens up a wide range of possibilities, from building chatbots and virtual assistants to generating content and automating workflows.

In this guide, we’ll walk through how to use the ChatGPT API with Python, one of the most popular and versatile programming languages. Whether you’re a beginner or an experienced developer, you’ll learn how to set up the API, make requests, handle responses, and take advantage of the advanced features to create intelligent, responsive applications.

We’ll cover:

- Setting up your Python environment to interact with the API.

- Making your first API call and understanding the response structure.

- Advanced techniques for prompt engineering, controlling output parameters, and maintaining conversation context.

- Real-world use cases such as building a simple chatbot or generating content dynamically.

By the end of this guide, you’ll have a solid understanding of how to integrate ChatGPT with Python and a foundation for building your own applications.

Prerequisites

Before diving into the process of using the ChatGPT API with Python, there are a few things you need to have in place. This section will cover the basic knowledge, tools, and accounts you’ll need to get started.

1. Basic Python Knowledge

To follow this tutorial, you should have a basic understanding of Python. Specifically, you should be comfortable with:

- Variables, functions, and data types (strings, lists, dictionaries, etc.).

- Making HTTP requests and handling JSON responses.

- Installing and using external libraries via pip.

If you’re new to Python, consider reviewing some introductory materials or tutorials to familiarize yourself with these concepts before proceeding.

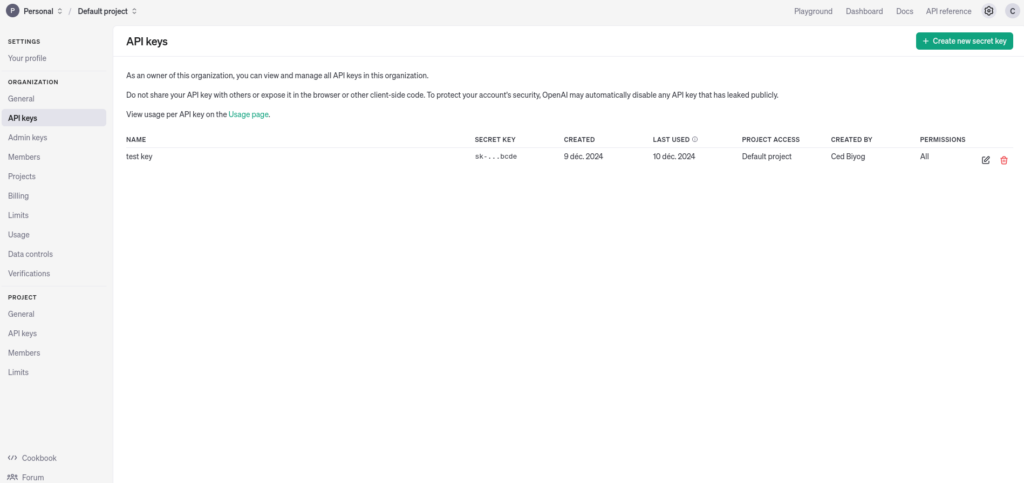

2. OpenAI API Key

To access the ChatGPT API, you’ll need an OpenAI API key. If you don’t have one yet, follow these steps:

- Go to the OpenAI website.

- Sign up for an account or log in if you already have one.

- Navigate to the API section of your account.

- Generate a new API key. You’ll use this key to authenticate your requests to the API.

Important: Keep your API key private. Do not expose it in public code repositories or share it with others.

API key generation

3. Python Libraries

The following Python libraries are used in this tutorial:

- openai: The official library for interacting with OpenAI’s API.

- requests: While openai handles all of the API communication in this tutorial, requests is commonly used in Python to make HTTP requests and handle responses, for example if you want to call the API directly.

- (Optional) python-dotenv: For securely managing your API key in environment variables.

You can install these libraries using pip:

```bash
pip install openai requests python-dotenv
```

Python Environment Setup

Creating the project

Start by creating a new project folder, for example one called myChatGPTClient:

```bash
mkdir myChatGPTClient
cd myChatGPTClient
```

Create a file named app.py; we will write our code in that file.

It’s good practice to create a virtual environment for your Python project to manage dependencies more easily and avoid conflicts with other projects. If you’re not familiar with setting up a virtual environment, here’s how to do it:

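Create a virtual environment:

```bash
python -m venv myvenv
```

Activate it (macOS/Linux):

```bash
source myvenv/bin/activate
```

On Windows, run myvenv\Scripts\activate.bat (Command Prompt) or myvenv\Scripts\Activate.ps1 (PowerShell) instead.

Install dependencies: after activating your virtual environment, install the necessary libraries as shown earlier.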
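Before moving on, you can confirm that the virtual environment is active and the libraries are installed. The sketch below relies only on the standard library: inside a venv, sys.prefix points to the environment while sys.base_prefix still points to the base Python installation, and importlib.metadata.version() reports an installed package’s version. The helper names in_virtualenv and installed_versions are illustrative, not part of any library.

```python
import sys
from importlib.metadata import PackageNotFoundError, version


def in_virtualenv() -> bool:
    """Return True when running inside a venv-style virtual environment."""
    # In a venv, sys.prefix is the environment directory, while
    # sys.base_prefix is the Python installation it was created from.
    return sys.prefix != sys.base_prefix


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    print("Inside a virtual environment:", in_virtualenv())
    for name, ver in installed_versions(["openai", "requests", "python-dotenv"]).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```

If a package shows as NOT INSTALLED, re-run the pip install command with the virtual environment activated.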

API key configuration

To securely connect to the ChatGPT API, you need to configure your API key without exposing it directly in your code. In this section, we store the key in an environment variable and read it from our Python project.

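Create a .env file in the project folder to store the project’s environment variables:

```text
OPENAI_API_KEY=your_api_key_here
```

Install the python-dotenv library to load variables from the .env file (skip this step if you already installed it earlier):

```bash
pip install python-dotenv
```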
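Conceptually, load_dotenv() reads KEY=VALUE lines from .env and copies them into the process environment so that os.getenv() can find them; by default (override=False) it does not overwrite variables that are already set. To make that concrete, here is a deliberately simplified, hypothetical loader (load_env_file is an illustrative name). It is not python-dotenv’s implementation and skips features such as export prefixes, variable expansion, and multiline values, so keep using python-dotenv in real code.

```python
import os


def load_env_file(path=".env"):
    """Simplified illustration of loading KEY=VALUE pairs into os.environ."""
    loaded = {}
    with open(path, encoding="utf-8") as f:
        for raw_line in f:
            line = raw_line.strip()
            # Skip blank lines, comments, and lines without "="
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, _, value = line.partition("=")
            key, value = key.strip(), value.strip()
            # Remove one pair of matching surrounding quotes, if present
            if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
                value = value[1:-1]
            loaded[key] = value
            # Mimic load_dotenv's default: never overwrite an existing variable
            os.environ.setdefault(key, value)
    return loaded
```

The "don't overwrite" behavior means a key exported in your shell takes precedence over the one in .env, which is handy when you temporarily want to test a different key.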

In app.py, use python-dotenv to load the .env file and access the API key:

```python
import os

from dotenv import load_dotenv

# Load the .env file
load_dotenv()

# Retrieve the API key from environment variables
api_key = os.getenv("OPENAI_API_KEY")

# Check if the API key was successfully loaded
if not api_key:
    raise ValueError("API key not found. Please make sure the .env file exists and contains your API key.")
```

If you want to track your changes with Git, now is a good time to initialize a repository with the following command:

```bash
git init
```

To avoid accidentally committing your API key to version control (e.g., GitHub), add the .env file to your .gitignore file so that Git does not track it.

In your .gitignore file, add the following line:

```text
.env
```

Making Your First API Call

Now that you’ve configured your API key, it’s time to make your first call to the ChatGPT API. In this section, we’ll walk you through sending a basic request to the API and understanding the response.
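Every chat completion request sends a list of messages, where each message is a dictionary with a role and a content field. The "system" role sets the assistant’s behavior, "user" holds what the user asks, and "assistant" holds earlier model replies. The small helper below is a hypothetical convenience (build_messages is not part of the openai library) that shows the shape of that list for a single question; the system prompt text is likewise just an example.

```python
def build_messages(question, system_prompt=None):
    """Build the messages list for a single-question chat completion request."""
    messages = []
    if system_prompt:
        # Optional instructions that shape how the assistant answers
        messages.append({"role": "system", "content": system_prompt})
    # The actual question always goes in a "user" message
    messages.append({"role": "user", "content": question})
    return messages


messages = build_messages(
    "What is the capital of France?",
    system_prompt="You are a concise assistant.",  # hypothetical example prompt
)
print(messages)
```

You could pass the result as messages=build_messages(question) in the API calls that follow.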

1. Basic API Call: Asking ChatGPT a Simple Question

We’ll start by asking ChatGPT a simple question, such as “What is the capital of France?” Here’s how you can do it using Python and the OpenAI API:

```python
import os

from dotenv import load_dotenv
from openai import OpenAI

# Load the environment variables from .env
load_dotenv()

# Read the API key
api_key = os.getenv("OPENAI_API_KEY")

# Create an OpenAI client (if api_key is omitted, the client reads
# OPENAI_API_KEY from the environment by itself)
client = OpenAI(api_key=api_key)

# Define the question
question = "What is the capital of France?"

# Make a simple API call to ask a question
response = client.chat.completions.create(
    model="gpt-3.5-turbo",  # Use the GPT-3.5-turbo model
    messages=[
        # The question goes in a "user" message
        {"role": "user", "content": question},
    ],
    max_tokens=512,  # Maximum number of tokens to generate
    n=1,  # Number of completions to generate
    stop=None,  # No custom stop sequence
    temperature=0.8,  # Sampling temperature: higher values give more varied output
)

# Extract the text of the first (and only) choice
answer = response.choices[0].message.content

# Print the response text
print("Response from ChatGPT:", answer)
```

After running the above script, you should see output similar to the following:

```text
Response from ChatGPT: The capital of France is Paris.
```

2. Understanding the API Response

The response object contains a lot of information. Here’s a quick breakdown of the important parts:

- response.choices: A list containing the model’s responses. Each item in the list is one candidate completion. Because we set n=1, it contains exactly one item.

- response.choices[0].message.content: The actual text of the response. This is the part we’re interested in, as it is what ChatGPT generated in response to your prompt.

- Other fields: The response also contains metadata, such as token usage (response.usage.prompt_tokens, response.usage.completion_tokens, and response.usage.total_tokens), the model that served the request (response.model), and why generation stopped (response.choices[0].finish_reason). You can access this information if you need to analyze or log it, but for now we’re focusing on the text.
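To see these fields together, here is a small hypothetical helper (summarize_response is not part of the openai library) that pulls the commonly used parts out of a chat completion response. It only reads attributes, so it works on the object returned by client.chat.completions.create() and, for testing, on any object with the same shape.

```python
def summarize_response(response):
    """Collect commonly used fields from a chat completion response."""
    choice = response.choices[0]
    # usage can be missing (e.g. in some streaming setups), so guard it
    usage = getattr(response, "usage", None)
    return {
        "text": choice.message.content,
        # Why generation stopped, e.g. "stop" (natural end) or "length" (hit max_tokens)
        "finish_reason": choice.finish_reason,
        "model": response.model,
        "prompt_tokens": usage.prompt_tokens if usage else None,
        "completion_tokens": usage.completion_tokens if usage else None,
        "total_tokens": usage.total_tokens if usage else None,
    }
```

For example, if summarize_response(response)["finish_reason"] is "length", the answer was cut off by max_tokens and you may want to raise that limit.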

3. Handling API Errors

Sometimes things can go wrong when making API calls (e.g., network issues, invalid API keys, or rate limits). Here’s how to handle errors gracefully:

```python
import os

import openai
from dotenv import load_dotenv
from openai import OpenAI

# Load the environment variables from .env
load_dotenv()

# Read the API key and create an OpenAI client
api_key = os.getenv("OPENAI_API_KEY")
client = OpenAI(api_key=api_key)

# Define the question
question = "What is the capital of France?"

# RateLimitError and APIConnectionError are subclasses of APIError,
# so they must be caught before the generic APIError handler.
try:
    # Make a simple API call to ask a question
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",  # Use the GPT-3.5-turbo model
        messages=[
            {"role": "user", "content": question},  # The question to ask
        ],
        max_tokens=512,  # Maximum number of tokens to generate
        n=1,  # Number of completions to generate
        stop=None,  # No custom stop sequence
        temperature=0.8,  # Sampling temperature
    )
    answer = response.choices[0].message.content
    print("Response from ChatGPT:", answer)
except openai.RateLimitError as e:
    # Handle rate limit error (exponential backoff is recommended)
    print(f"OpenAI API request exceeded rate limit: {e}")
except openai.APIConnectionError as e:
    # Handle connection error here, e.g. retry later
    print(f"Failed to connect to OpenAI API: {e}")
except openai.APIError as e:
    # Handle any other API error here, e.g. log it
    print(f"OpenAI API returned an API Error: {e}")
```

Next Steps

Congratulations! You’ve just made your first successful API call to ChatGPT. Now you can start experimenting with more complex prompts, tuning the output with parameters like temperature, max_tokens, and top_p, and building out your own projects.
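The rate-limit handler in the error-handling example only prints the error, although its comment recommends exponential backoff. As you build out your own projects, a retry wrapper like the hypothetical call_with_backoff below (not part of the openai library) is one way to do that: it waits roughly twice as long after each failure, adds random jitter, and re-raises once the retries run out. Keep in mind that the openai client already retries some failures on its own (configurable with OpenAI(max_retries=...)), so treat this as a sketch for when you want extra control.

```python
import random
import time


def call_with_backoff(fn, retry_on=(Exception,), max_retries=5,
                      base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(), retrying with exponential backoff on the given exception types."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_retries:
                raise  # Out of retries: re-raise the last error
            # The upper bound on the wait doubles each attempt (1s, 2s, 4s, ...),
            # capped at max_delay; random jitter keeps many clients from
            # retrying in lockstep.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))
```

For example, you could wrap the request as call_with_backoff(lambda: client.chat.completions.create(model="gpt-3.5-turbo", messages=[{"role": "user", "content": question}]), retry_on=(openai.RateLimitError, openai.APIConnectionError)). The sleep parameter exists so tests can pass a fake function instead of actually waiting.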

In the next posts, we’ll dive deeper into more advanced features, such as prompt engineering and controlling the conversation flow when building interactive applications.